Logic was originally conceived as a way to characterize human arguments, in which the concept of “truth” has always seemed quite central. And when logic was applied to the foundations of mathematics, “truth” was also usually assumed to be quite central. But the way we’ve modeled mathematics here has been much more about what statements can be derived (or entailed) than about any kind of abstract notion of what statements can be “tagged as true”. In other words, we’ve been more concerned with “structurally deriving” that “1+1=2” than with saying that “1+1=2 is true”.

But what is the relation between this kind of “constructive derivation” and the logical notion of truth? We might just say that “if we can construct a statement then we should consider it true”. And if we’re starting from axioms, then in a sense we’ll never have an “absolute notion of truth”—because whatever we derive is only “as true as the axioms we started from”.

One issue that can come up is that our axioms might be inconsistent—in the sense that from them we can derive two obviously inconsistent statements. But to get further in discussing things like this we really need not only to have a notion of truth, but also a notion of falsity.

In traditional logic it has tended to be assumed that truth and falsity are very much “the same kind of thing”—like 1 and 0. But one feature of our view of mathematics here is that actually truth and falsity seem to have a rather different character. And perhaps this is not surprising—because in a sense if there’s one true statement about something there are typically an infinite number of false statements about it. So, for example, the single statement 1+1=2 is true, but the infinite collection of statements 1+1=n for any other n are all false.

There is another aspect to this, discussed since at least the Middle Ages, often under the name of the “principle of explosion”: that as soon as one assumes any statement that is false, one can logically derive absolutely any statement at all. In other words, introducing a single “false axiom” will start an explosion that will eventually “blow up everything”.
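The classical argument behind this can be written out in a few lines. As a sketch, assuming ordinary propositional logic with disjunction introduction and disjunctive syllogism (the symbols $P$ and $Q$ are introduced here purely for illustration): suppose a false statement $P$ has been assumed alongside its already-derivable negation $\neg P$, and let $Q$ be any statement whatsoever.

$$
\begin{aligned}
&1.\quad P && \text{(the assumed statement)}\\
&2.\quad \neg P && \text{(already derivable)}\\
&3.\quad P \lor Q && \text{(from 1, disjunction introduction)}\\
&4.\quad Q && \text{(from 2 and 3, disjunctive syllogism)}
\end{aligned}
$$

Since nothing was assumed about $Q$, the same four lines yield every statement.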

So within our model of mathematics we might say that things are “true” if they can be derived, and are “false” if they lead to an “explosion”. But let’s say we’re given some statement. How can we tell if it’s true or false? One thing we can do to find out if it’s true is to construct an entailment cone from our axioms and see if the statement appears anywhere in it. Of course, given computational irreducibility there’s in general no upper bound on how far we’ll need to go to determine this. But now to find out if a statement is false we can imagine introducing the statement as an additional axiom, and then seeing if the entailment cone that’s now produced contains an explosion—though once again there’ll in general be no upper bound on how far we’ll have to go to guarantee that we have a “genuine explosion” on our hands.
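To make the “no upper bound” point concrete, here is a minimal sketch of a bounded search through an entailment cone. It assumes statements are plain strings and rules are substring replacements, which is only a stand-in for general symbolic expressions. The names `rewrite_once` and `search_cone` and the example rules are hypothetical, chosen just for illustration.

```python
def rewrite_once(statements, rules):
    """Apply each rule at each matching position of each string, one time."""
    results = set()
    for s in statements:
        for lhs, rhs in rules:
            pos = s.find(lhs)
            while pos != -1:
                results.add(s[:pos] + rhs + s[pos + len(lhs):])
                pos = s.find(lhs, pos + 1)  # also catches overlapping matches
    return results


def search_cone(axioms, rules, target, max_steps):
    """Look for target in the entailment cone, giving up after max_steps."""
    seen = set(axioms)
    frontier = set(axioms)
    for step in range(max_steps + 1):
        if target in seen:
            return ("found", step)
        if step == max_steps:
            break
        frontier = rewrite_once(frontier, rules) - seen
        if not frontier:
            return ("exhausted", step)  # finite cone, and target is not in it
        seen |= frontier
    return ("unknown", max_steps)  # budget ran out; no conclusion either way


# Hypothetical rules, not taken from the text above.
EXAMPLE_RULES = [("A", "AB"), ("BB", "A")]
print(search_cone(["ABB"], EXAMPLE_RULES, "AA", 5))  # ('found', 1)
print(search_cone(["ABB"], EXAMPLE_RULES, "B", 4))   # ('unknown', 4)
```

The important outcome is the third one: when the step budget runs out, the search has shown neither that the statement is derivable nor that it isn’t.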

So is there any alternative procedure? Potentially the answer is yes: we can just try to see if our statement is somehow equivalent to “true” or “false”. But in our model of mathematics where we’re just talking about transformations on symbolic expressions, there’s no immediate built-in notion of “true” and “false”. To talk about these we have to add something. And for example what we can do is to say that “true” is equivalent to what seems like an “obvious tautology” such as x=x, or in our computational notation, x_↔x_, while “false” is equivalent to something “obviously explosive”, like x_↔y_ (or in our particular setup something more like x_↔x_∘y_).

But even though something like “Can we find a way to reach x_↔x_ from a given statement?” seems like a much more practical question for an actual theorem-proving system than “Can we fish our statement out of a whole entailment cone?”, it runs into many of the same issues, in particular that there’s no upper limit on the length of path that might be needed.

Soon we’ll return to the question of how all this relates to our interpretation of mathematics as a slice of the ruliad—and to the concept of the entailment fabric perceived by a mathematical observer. But to further set the context for what we’re doing let’s explore how what we’ve discussed so far relates to things like Gödel’s theorem, and to phenomena like incompleteness.

From the setup of basic logic we might assume that we could consider any statement to be either true or false. Or, more precisely, we might think that given a particular axiom system, we should be able to determine whether any statement that can be syntactically constructed with the primitives of that axiom system is true or false. We could explore this by asking whether every statement is either derivable or leads to an explosion—or can be proved equivalent to an “obvious tautology” or to an “obvious explosion”.

But as a simple “approximation” to this, let’s consider a string rewriting system in which we define a “local negation operation”. In particular, let’s assume that given a statement like ABBBA the “negation” of this statement just exchanges A and B, in this case yielding BAAAB.
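In code, this local negation is a one-line character swap. The function name `negate` is our own choice for this sketch.

```python
def negate(statement: str) -> str:
    """Exchange every A with B and every B with A."""
    return statement.translate(str.maketrans("AB", "BA"))


print(negate("ABBBA"))  # BAAAB
```

Negating twice gives back the original string, and no string can equal its own negation, since every character changes.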

Now let’s ask what statements are generated from a given axiom system. Say we start with ABB. After one step of possible substitutions we get

while after 2 steps we get:

And in our setup we’re effectively asserting that these are “true” statements. But now let’s “negate” the statements, by exchanging A and B. And if we do this, we’ll see that there’s never a statement where both it and its negation occur. In other words, there’s no obvious inconsistency being generated within this axiom system.

But if we consider instead the axiom ABBA then this gives:

And since this includes both ABAB and its “negation” BABA, by our criteria we must consider this axiom system to be inconsistent.
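The consistency test used here can be sketched as a scan over a set of generated statements, looking for any statement whose negation is also present. The helper names are hypothetical, and the sample set below is hand-made for illustration (it includes the ABAB and BABA pair mentioned above), not the actual output of the axiom system.

```python
def negate(statement):
    """Exchange A and B, as in the local negation described above."""
    return statement.translate(str.maketrans("AB", "BA"))


def contradictions(cone):
    """Return every statement in cone whose negation is also in cone."""
    cone = set(cone)
    return {s for s in cone if negate(s) in cone}


def is_consistent(cone):
    """True if no statement appears together with its negation."""
    return not contradictions(cone)


# Hand-made sample, assumed for illustration only.
sample = {"ABBA", "ABAB", "BABA", "AAB"}
print(sorted(contradictions(sample)))  # ['ABAB', 'BABA']
print(is_consistent(sample))           # False
```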

In addition to inconsistency, we can also ask about incompleteness. For all possible statements, does the axiom system eventually generate either the statement or its negation? Or, in other words, can we always decide from the axiom system whether any given statement is true or false?

With our simple assumption about negation, questions of inconsistency and incompleteness become at least in principle very simple to explore. Starting from a given axiom system, we generate its entailment cone, then we ask within this cone what fraction of possible statements, say of a given length, occur.

If the answer is more than 50% we know there’s inconsistency, while if the answer is less than 50% that’s evidence of incompleteness. So what happens with different possible axiom systems?
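The 50% threshold comes from pairing: the 2^n strings of length n split into 2^(n-1) pairs, each consisting of a statement and its negation, so once more than half of them have been reached some pair must be fully present. Here is a minimal sketch of that check, assuming the cone is given as a set of A/B strings. The function names and the labels returned by `diagnose` are our own.

```python
def negate(statement):
    """Exchange A and B."""
    return statement.translate(str.maketrans("AB", "BA"))


def coverage(cone, length):
    """Fraction of the 2**length possible A/B strings of this length in cone."""
    reached = {s for s in cone if len(s) == length}
    return len(reached) / 2 ** length


def diagnose(cone, length):
    """Classify what the statements of one length say about the system."""
    reached = {s for s in cone if len(s) == length}
    fraction = len(reached) / 2 ** length
    if fraction > 0.5:
        return "inconsistent"  # pigeonhole: some negation pair is complete
    if any(negate(s) in reached for s in reached):
        return "inconsistent"  # can also happen at or below 50%
    if fraction < 0.5:
        return "incomplete so far"  # some pair has neither member yet
    return "consistent and complete at this length"


print(coverage({"AA", "AB", "BA"}, 2))  # 0.75
print(diagnose({"AA", "AB", "BA"}, 2))  # inconsistent
print(diagnose({"AB"}, 2))              # incomplete so far
```

Note that “incomplete so far” only describes a finite portion of the cone; later steps may still add the missing statements.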

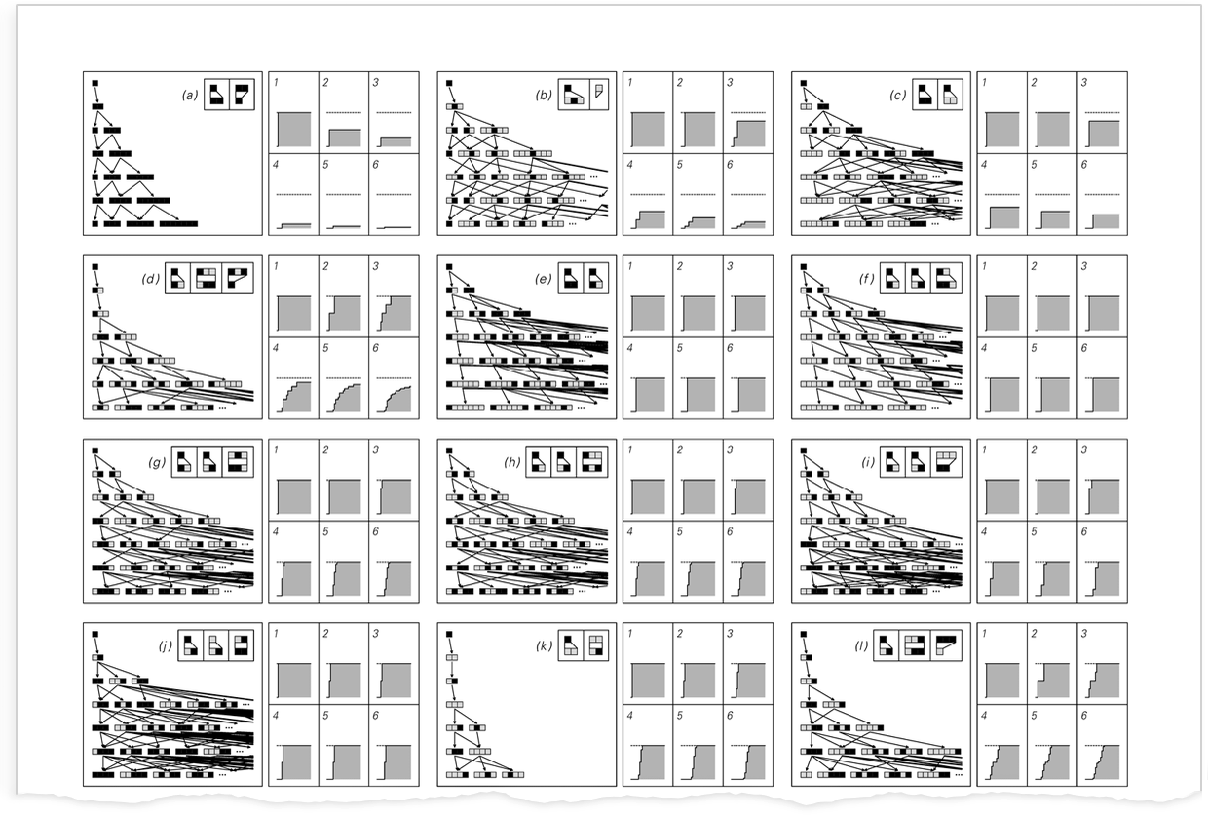

Here are some results from A New Kind of Science, in each case showing both what amounts to the raw entailment cone (or, in this case, multiway system evolution from “true”), and the number of statements of a given length reached after progressively more steps:

At some level this is all rather straightforward. But from the pictures above we can already get a sense that there’s a problem. For most axiom systems the fraction of statements reached of a given length changes as we increase the number of steps in the entailment cone. Sometimes it’s straightforward to see what fraction will be achieved even after an infinite number of steps. But often it’s not.

And in general we’ll run into computational irreducibility—so that in effect the only way to determine whether some particular statement is generated is just to go to ever more steps in the entailment cone and see what happens. In other words, there’s no guaranteed-finite way to decide what the ultimate fraction will be—and thus whether or not any given axiom system is inconsistent, or incomplete, or neither.
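One can watch this happen by recording the fraction after each step. The sketch below assumes string rewriting with hypothetical rules (not the ones from the pictures above). The fraction it reports for step k tells us nothing definite about step k+1.

```python
def rewrite_once(statements, rules):
    """Apply each rule at each matching position of each string, one time."""
    results = set()
    for s in statements:
        for lhs, rhs in rules:
            pos = s.find(lhs)
            while pos != -1:
                results.add(s[:pos] + rhs + s[pos + len(lhs):])
                pos = s.find(lhs, pos + 1)
    return results


def coverage_by_step(axiom, rules, length, max_steps):
    """Fraction of A/B strings of the given length reached after 0..max_steps steps."""
    def fraction(statements):
        return sum(1 for s in statements if len(s) == length) / 2 ** length

    seen = {axiom}
    frontier = {axiom}
    history = [fraction(seen)]
    for _ in range(max_steps):
        frontier = rewrite_once(frontier, rules) - seen
        seen |= frontier
        history.append(fraction(seen))
    return history


# Hypothetical rules, assumed for illustration only.
EXAMPLE_RULES = [("A", "AB"), ("BB", "A")]
print(coverage_by_step("ABB", EXAMPLE_RULES, length=3, max_steps=5))
```

Because the cone only accumulates statements, the recorded fraction never decreases. What can’t be read off from any finite prefix of the list is where it will eventually settle.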

For some axiom systems it may be possible to tell. But for some axiom systems it’s not, in effect because we don’t in general know how far we’ll have to go to determine whether a given statement is true or not.

A certain amount of additional technical detail is required to reach the standard versions of Gödel’s incompleteness theorems. (Note that these theorems were originally stated specifically for the Peano axioms for arithmetic, but the Principle of Computational Equivalence suggests that they’re in some sense much more general, and even ubiquitous.) But the important point here is that given an axiom system there may be statements that either can or cannot be reached—but there’s no upper bound on the length of path that might be needed to reach them even if one can.

OK, so let’s come back to talking about the notion of truth in the context of the ruliad. We’ve discussed axiom systems that might show inconsistency, or incompleteness—and the difficulty of determining if they do. But the ruliad in a sense contains all possible axiom systems—and generates all possible statements.

So how then can we ever expect to identify which statements are “true” and which are not? When we talked about particular axiom systems, we said that any statement that is generated can be considered true (at least with respect to that axiom system). But in the ruliad every statement is generated. So what criterion can we use to determine which we should consider “true”?

The key idea is that any computationally bounded observer (like us) can perceive only a tiny slice of the ruliad. And it’s a perfectly meaningful question to ask whether a particular statement occurs within that perceived slice.

One way of picking a “slice” is just to start from a given axiom system, and develop its entailment cone. And with such a slice, the criterion for the truth of a statement is exactly what we discussed above: does the statement occur in the entailment cone?

But how do typical “mathematical observers” actually sample the ruliad? As we discussed in the previous section, it seems to be much more by forming an entailment fabric than by developing a whole entailment cone. And in a sense progress in mathematics can be seen as a process of adding pieces to an entailment fabric: pulling in one mathematical statement after another, and checking that they fit into the fabric.

So what happens if one tries to add a statement that “isn’t true”? The basic answer is that it produces an “explosion” in which the entailment fabric can grow to encompass essentially any statement. From the point of view of underlying rules—or the ruliad—there’s really nothing wrong with this. But the issue is that it’s incompatible with an “observer like us”—or with any realistic idealization of a mathematician.

Our view of a mathematical observer is essentially an entity that accumulates mathematical statements into an entailment fabric. But we assume that the observer is computationally bounded, so in a sense they can only work with a limited collection of statements. So if there’s an explosion in an entailment fabric that means the fabric will expand beyond what a mathematical observer can coherently handle. Or, put another way, the only kind of entailment fabrics that a mathematical observer can reasonably consider are ones that “contain no explosions”. And in such fabrics, it’s reasonable to take the generation or entailment of a statement as a signal that the statement can be considered true.

The ruliad is in a sense a unique and absolute thing. And we might have imagined that it would lead us to a unique and absolute definition of truth in mathematics. But what we’ve seen is that that’s not the case. And instead our notion of truth is something based on how we sample the ruliad as mathematical observers. But now we must explore what this means about what mathematics as we perceive it can be like.