It’s been a long personal journey to get to the ideas described here—stretching back nearly 45 years. Parts have been quite direct, steadily building over the course of time. But other parts have been surprising—even shocking. And to get to where we are now has required me to rethink some very long-held assumptions, and adopt what I had believed was a rather different way of thinking—even though, ironically, I’ve realized in the end that many aspects of this way of thinking pretty much mirror what I’ve done all along at a practical and technological level.

Back in the late 1970s as a young theoretical physicist I had discovered the “secret weapon” of using computers to do mathematical calculations. By 1979 I had outgrown existing systems and decided to build my own. But what should its foundations be? A key goal was to represent the processes of mathematics in a computational way. I thought about the methods I’d found effective in practice. I studied the history of mathematical logic. And in the end I came up with what seemed to me at the time the most obvious and direct approach: that everything should be based on transformations for symbolic expressions.

I was pretty sure this was actually a good general approach to computation of all kinds—and the system we released in 1981 was named SMP (“Symbolic Manipulation Program”) to reflect this generality. History has indeed borne out the strength of the symbolic expression paradigm—and it’s from that we’ve been able to build the huge tower of technology that is the modern Wolfram Language. But all along mathematics has been an important use case—and in effect we’ve now seen four decades of validation that the core idea of transformations on symbolic expressions is a good metamodel of mathematics.

When Mathematica was first released in 1988 we called it “A System for Doing Mathematics by Computer”, where by “doing mathematics” we meant doing computations in mathematics and getting results. People soon did all sorts of experiments on using Mathematica to create and present proofs. But the overwhelming majority of actual usage was for directly computing results—and almost nobody seemed interested in seeing the inner workings, presented as a proof or otherwise.

But in the 1980s I had started my work on exploring the computational universe of simple programs like cellular automata. And doing this was all about looking at the ongoing behavior of systems—or in effect the (often computationally irreducible) history of computations. And even though I sometimes talked about using my computational methods to do “experimental mathematics”, I don’t think I particularly thought about the actual progress of the computations I was studying as being like mathematical processes or proofs.

In 1991 I started working on what became A New Kind of Science, and in doing so I tried to systematically study possible forms of computational processes—and I was soon led to substitution systems and symbolic systems which I viewed in their different ways as being minimal idealizations of what would become Wolfram Language, as well as to multiway systems. There were some areas to which I was pretty sure the methods of A New Kind of Science would apply. Three that I wasn’t sure about were biology, physics and mathematics.

But by the late 1990s I had worked out quite a bit about the first two, and started looking at mathematics. I knew that Mathematica and what would become Wolfram Language were good representations of “practical mathematics”. But I assumed that to understand the foundations of mathematics I should look at the traditional low-level representation of mathematics: axiom systems.

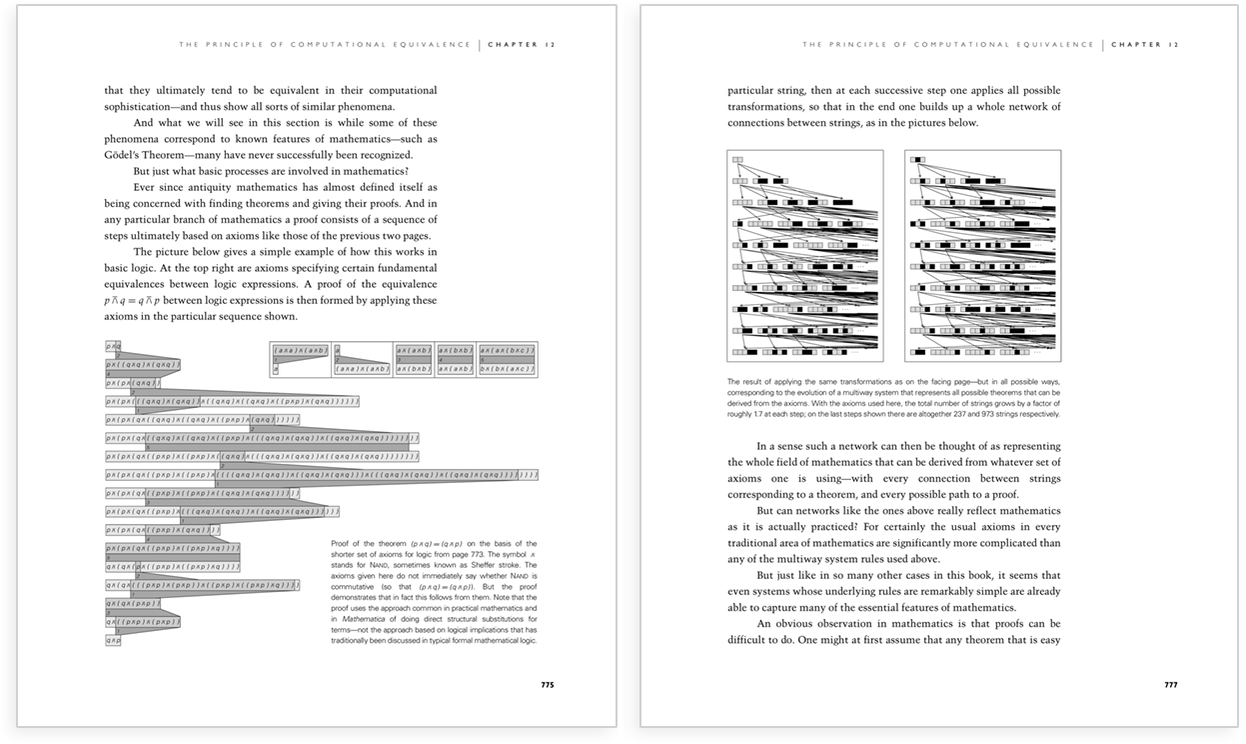

And in doing this I was soon able to simplify to multiway systems—with proofs being paths:

I had long wondered what the detailed relationships between things like my idea of computational irreducibility and earlier results in mathematical logic were. And I was pleased at how well many things could be clarified—and explicitly illustrated—by thinking in terms of multiway systems.

My experience in exploring simple programs in general had led to the conclusion that computational irreducibility and therefore undecidability were quite ubiquitous. So I considered it quite a mystery why undecidability seemed so rare in the mathematics that mathematicians typically did. I suspected that in fact undecidability was lurking close at hand—and I got some evidence of that by doing experimental mathematics. But why weren’t mathematicians running into this more? I came to suspect that it had something to do with the history of mathematics, and with the idea that mathematics had tended to expand its subject matter by asking “How can this be generalized while still having such-and-such a theorem be true?”

But I also wondered about the particular axiom systems that had historically been used for mathematics. They all fit easily on a couple of pages. But why these and not others? Following my general “ruliological” approach of exploring all possible systems I started just enumerating possible axiom systems—and soon found out that many of them had rich and complicated implications.

But where among these possible systems did the axiom systems historically used in mathematics lie? I did searches, and at about the 50,000th axiom was able to find the simplest axiom system for Boolean algebra. Proving that it was correct gave me my first serious experience with automated theorem proving.

But what kind of a thing was the proof? I made some attempt to understand it, but it was clear that it wasn’t something a human could readily understand—and reading it felt a bit like trying to read machine code. I recognized that the problem was in a sense a lack of “human connection points”—for example of intermediate lemmas that (like words in a human language) had a contextualized significance. I wondered about how one could find lemmas that “humans would care about”? And I was surprised to discover that at least for the “named theorems” of Boolean algebra a simple criterion could reproduce them.

Quite a few years went by. Off and on I thought about two ultimately related issues. One was how to represent the execution histories of Wolfram Language programs. And the other was how to represent proofs. In both cases there seemed to be all sorts of detail, and it seemed difficult to have a structure that would capture what would be needed for further computation—or any kind of general understanding.

Meanwhile, in 2009, we released Wolfram|Alpha. One of its features was that it had “step-by-step” math computations. But these weren’t “general proofs”: rather they were narratives synthesized in very specific ways for human readers. Still, a core concept in Wolfram|Alpha—and the Wolfram Language—is the idea of integrating in knowledge about as many things as possible in the world. We’d done this for cities and movies and lattices and animals and much more. And I thought about doing it for mathematical theorems as well.

We did a pilot project—on theorems about continued fractions. We trawled through the mathematical literature assessing the difficulty of extending the “natural math understanding” we’d built for Wolfram|Alpha. I imagined a workflow which would mix automated theorem generation with theorem search—in which one would define a mathematical scenario, then say “tell me interesting facts about this”. And in 2014 we set about engaging the mathematical community in a large-scale curation effort to formalize the theorems of mathematics. But try as we might, only people already involved in math formalization seemed to care; with few exceptions working mathematicians just didn’t seem to consider it relevant to what they did.

We continued, however, to push slowly forward. We worked with proof assistant developers. We curated various kinds of mathematical structures (like function spaces). I had estimated that we’d need more than a thousand new Wolfram Language functions to cover “modern pure mathematics”, but without a clear market we couldn’t motivate the huge design (let alone implementation) effort that would be needed—though, partly in a nod to the intellectual origins of mathematics, we did for example do a project that has succeeded in finally making Euclid-style geometry computable.

Then in the latter part of the 2010s a couple more “proof-related” things happened. Back in 2002 we’d started using equational logic automated theorem proving to get results in functions like FullSimplify. But we hadn’t figured out how to present the proofs that were generated. In 2018 we finally introduced FindEquationalProof—allowing programmatic access to proofs, and making it feasible for me to explore collections of proofs in bulk.

I had for decades been interested in what I’ve called “symbolic discourse language”: the extension of the idea of computational language to “everyday discourse”—and to the kind of thing one might want for example to express in legal contracts. And between this and our involvement in the idea of computational contracts, and things like blockchain technology, I started exploring questions of AI ethics and “constitutions”. At this point we’d also started to introduce machine-learning-based functions into the Wolfram Language. And—with my “human incomprehensible” Boolean algebra proof as “empirical data”—I started exploring general questions of explainability, and in effect proof.

And not long after that came the surprise breakthrough of our Physics Project. Extending my ideas from the 1990s about computational foundations for fundamental physics it suddenly became possible finally to understand the underlying origins of the main known laws of physics. And core to this effort—and particularly to the understanding of quantum mechanics—were multiway systems.

At first we just used the knowledge that multiway systems could also represent axiomatic mathematics and proofs to provide analogies for our thinking about physics (“quantum observers might in effect be doing critical-pair completions”, “causal graphs are like higher categories”, etc.) But then we started wondering whether the phenomenon of the emergence that we’d seen for the familiar laws of physics might also affect mathematics—and whether it could give us something like a “bulk” version of metamathematics.

I had long studied the transition from discrete “computational” elements to “bulk” behavior, first following my interest in the Second Law of thermodynamics, which stretched all the way back to age 12 in 1972, then following my work on cellular automaton fluids in the mid-1980s, and now with the emergence of physical space from underlying hypergraphs in our Physics Project. But what might “bulk” metamathematics be like?

One feature of our Physics Project—in fact shared with thermodynamics—is that certain aspects of its observed behavior depend very little on the details of its components. But what did they depend on? We realized that it all had to do with the observer—and their interaction (according to what I’ve described as the 4th paradigm for science) with the general “multicomputational” processes going on underneath. For physics we had some idea what characteristics an “observer like us” might have (and actually they seemed to be closely related to our notion of consciousness). But what might a “mathematical observer” be like?

In its original framing we talked about our Physics Project as being about “finding the rule for the universe”. But right around the time we launched the project we realized that that wasn’t really the right characterization. And we started talking about rulial multiway systems that instead “run every rule”—but in which an observer perceives only some small slice, that in particular can show emergent laws of physics.

But what is this “run every rule” structure? In the end it’s something very fundamental: the entangled limit of all possible computations—that I call the ruliad. The ruliad basically depends on nothing: it’s unique and its structure is a matter of formal necessity. So in a sense the ruliad “necessarily exists”—and, I argued, so must our universe.

But we can think of the ruliad not only as the foundation for physics, but also as the foundation for mathematics. And so, I concluded, if we believe that the physical universe exists, then we must conclude—a bit like Plato—that mathematics exists too.

But how did all this relate to axiom systems and ideas about metamathematics? I had two additional pieces of input from the latter half of 2020. First, following up on a note in A New Kind of Science, I had done an extensive study of the “empirical metamathematics” of the network of the theorems in Euclid, and in a couple of math formalization systems. And second, in celebration of the 100th anniversary of their invention essentially as “primitives for mathematics”, I had done an extensive ruliological and other study of combinators.

I began to work on this current piece in the fall of 2020, but felt there was something I was missing. Yes, I could study axiom systems using the formalism of our Physics Project. But was this really getting at the essence of mathematics? I had long assumed that axiom systems really were the “raw material” of mathematics—even though I’d long gotten signals they weren’t really a good representation of how serious, aesthetically oriented pure mathematicians thought about things.

In our Physics Project we’d always had as a target to reproduce the known laws of physics. But what should the target be in understanding the foundations of mathematics? It always seemed like it had to revolve around axiom systems and processes of proof. And it felt like validation when it became clear that the same concepts of “substitution rules applied to expressions” seemed to span my earliest efforts to make math computational, the underlying structure of our Physics Project, and “metamodels” of axiom systems.

But somehow the ruliad—and the idea that if physics exists so must math—made me realize that this wasn’t ultimately the right level of description. And that axioms were some kind of intermediate level, between the “raw ruliad”, and the “humanized” level at which pure mathematics is normally done. At first I found this hard to accept; not only had axiom systems dominated thinking about the foundations of mathematics for more than a century, but they also seemed to fit so perfectly into my personal “symbolic rules” paradigm.

But gradually I got convinced that, yes, I had been wrong all this time—and that axiom systems were in many respects missing the point. The true foundation is the ruliad, and axiom systems are a rather-hard-to-work-with “machine-code-like” description below the inevitable general “physicalized laws of metamathematics” that emerge—and that imply that for observers like us there’s a fundamentally higher-level approach to mathematics.

At first I thought this was incompatible with my general computational view of things. But then I realized: “No, quite the opposite!” All these years I’ve been building the Wolfram Language precisely to connect “at a human level” with computational processes—and with mathematics. Yes, it can represent and deal with axiom systems. But it’s never felt particularly natural. And it’s because they’re at an awkward level—neither at the level of the raw ruliad and raw computation, nor at the level where we as humans define mathematics.

But now, I think, we begin to get some clarity on just what this thing we call mathematics really is. What I’ve done here is just a beginning. But between its explicit computational examples and its conceptual arguments I feel it’s pointing the way to a broad and incredibly fertile new understanding that—even though I didn’t see it coming—I’m very excited is now here.